Implementing artificial intelligence in organisations often sparks a familiar question:

“How much human effort will this replace?”

I hear it in boardrooms and project rooms alike: CIOs, CEOs and COOs asking for ROI and capacity. And I hear the quieter version from teams:

“Is my job next?”

Both concerns are valid. But the way we frame the question shapes the entire strategy and the culture that follows.

Because when we ask “Will AI replace humans?” we’re often assuming something that isn’t true.

The hidden assumption

1. What leaders should ask instead

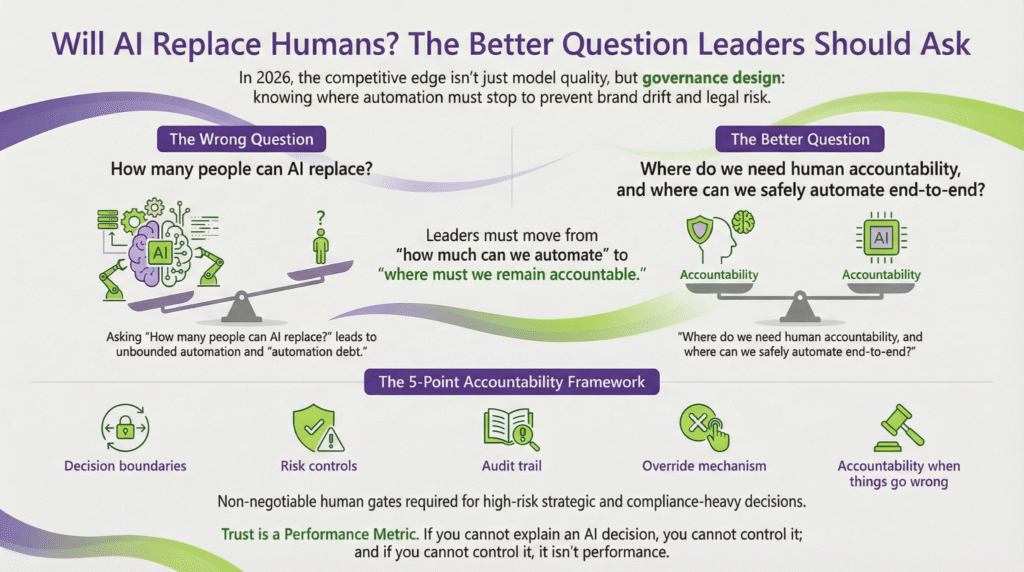

When leaders ask “How many people can this replace?” they’re often measuring AI like a labour-saving machine. A better lens is to measure AI like a decision system.

The better question

Instead of “How many people can AI replace?” ask:

“Where do we need human accountability, and where can we safely automate end-to-end?”

Accountability can’t be automated.

Because once AI starts influencing outcomes, someone must own:

Where AI can safely automate

Where removing humans becomes exposure

High-stakes workflows look very different:

A simple tier model: speed with trust

One practical way to guide design decisions is to classify workflows by risk and required oversight:
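As a rough illustration of such a classification, here is a minimal Python sketch. The tier names, the `Workflow` fields, and the rules are assumptions chosen for illustration. They borrow the criteria used throughout this article (stakes, how quickly outcomes can be validated, how safely they can be rolled back) and are not a prescribed framework.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    # Hypothetical tier labels, named after the oversight each one requires.
    AUTOMATE_END_TO_END = "automate end-to-end"
    HUMAN_MONITORS = "human monitors and handles exceptions"
    HUMAN_APPROVES = "human approves each decision"


@dataclass
class Workflow:
    name: str
    high_stakes: bool  # could an error cause serious harm or liability?
    testable: bool     # can outcomes be validated quickly?
    reversible: bool   # can a bad outcome be rolled back safely?


def classify(workflow: Workflow) -> Tier:
    """Assign an oversight tier using simple, illustrative rules."""
    if workflow.high_stakes:
        return Tier.HUMAN_APPROVES
    if workflow.testable and workflow.reversible:
        return Tier.AUTOMATE_END_TO_END
    return Tier.HUMAN_MONITORS


reset = Workflow("password reset", high_stakes=False, testable=True, reversible=True)
print(classify(reset).value)  # automate end-to-end
```

In practice the rules would come out of a risk assessment agreed with the accountable owners. The point of the sketch is only that the tier is decided explicitly, per workflow, before anything is automated.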

Here are a few practical examples across industries where you can design checks, validate outcomes quickly, and roll back safely when something’s off, while keeping human accountability where it matters.

Example A: Large Australian multi-contact-centre organisation

In one contact centre transformation for a large Australian organisation operating multiple contact centres, the core challenge wasn’t a lack of technology but fragmentation: multiple CRMs/case systems, multiple carriers/CCaaS platforms, and dispersed customer interaction history.

The key Human-in-the-Loop lesson: even with self-service voice AI and automation, we did not remove humans where accountability mattered. Humans remained responsible for:

Example B: Local government / council service delivery (secure internal AI assistant)

Example C: Financial services (onboarding and credit decisions)

If you’re an employee asking “Will AI replace my job?”, here’s the best self-check:

The one question to ask yourself

Am I mostly doing repeatable, testable, low-risk tasks?

If the honest answer is yes, don’t panic: upskill. Because the work won’t disappear; it will shift from doing the task to supervising, improving, validating, and governing the system that does it.

You don’t need to become a data scientist. But you do need to become someone who can:

write better instructions and acceptance criteria

The three modes of modern AI operations

Human out of the loop: automation runs end-to-end (low risk)

Think aviation: autopilot handles the routine, but pilots remain responsible, monitoring systems, managing exceptions, and taking over when risk rises.

So in most enterprises, the direction will be: less “human in the loop” everywhere, more “human on the loop” by default, and almost never “no human accountability.”
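To make the “human on the loop” default a little more concrete, here is a small Python sketch of the autopilot pattern: automation handles the routine, every decision is logged for review, and a case is handed to a person when risk rises. The `risk_score` input, the 0.7 threshold, and all names are hypothetical; a real system would derive them from its own risk assessment.

```python
from dataclasses import dataclass, field


@dataclass
class OnTheLoopRunner:
    """Runs cases automatically, escalating to a human when risk rises."""
    risk_threshold: float = 0.7  # assumed cut-off, to be tuned per workflow
    audit_log: list = field(default_factory=list)

    def handle(self, case_id: str, risk_score: float) -> str:
        # Routine cases are automated; risky ones go to an accountable person.
        if risk_score >= self.risk_threshold:
            outcome = "escalated_to_human"
        else:
            outcome = "automated"
        # Every decision is recorded so a supervisor can review it later.
        self.audit_log.append((case_id, risk_score, outcome))
        return outcome


runner = OnTheLoopRunner()
print(runner.handle("case-001", 0.2))  # automated
print(runner.handle("case-002", 0.9))  # escalated_to_human
```

The audit log is what keeps the human meaningfully “on” the loop: even when nothing is escalated, someone can still inspect what the system did and why.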

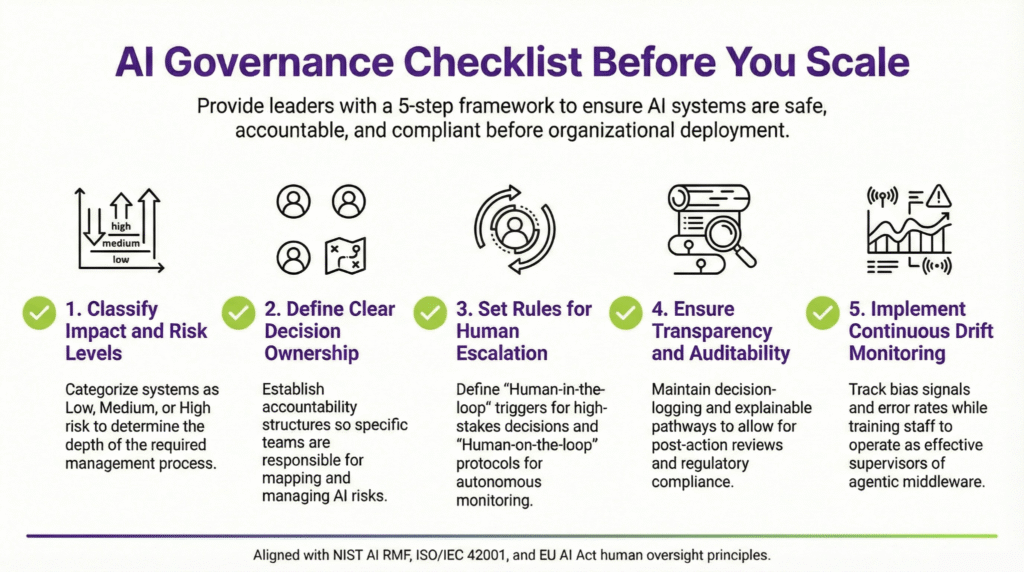

If you’re scaling AI across the business, here’s a practical checklist you can apply to any use case (before you scale it):

This isn’t just “nice to have”; it’s increasingly aligned with how governance frameworks and emerging regulation describe safe AI deployment (see NIST AI RMF, ISO/IEC 42001, and the EU AI Act’s emphasis on human oversight).

So, will AI completely remove humans in the loop?

In low-risk, repeatable work: yes, increasingly.

In high-stakes, high-accountability work: no. And it shouldn’t.

The winning organisations won’t be the ones with the most automation. They’ll be the ones with the best governance design: clear accountability, safe escalation, strong auditability, and people who know how to operate AI responsibly.

References / further reading

Stanford HAI: A Human-Centered Approach to the AI Revolution

Stanford HAI (LinkedIn): Fei-Fei Li on automation misconception

IBM: What Are AI Hallucinations?

NIST: AI Risk Management Framework 1.0 (NIST.AI.100-1, PDF)

ISO: ISO/IEC 42001:2023 (AI management systems)

Standards Australia: Spotlight on AS ISO/IEC 42001:2023

EU: Regulatory framework on AI (overview)

EU AI Act Explorer: Article 14, Human oversight

Stanford GSB: Shantanu Narayen, Generative AI Won’t Replace Human Ingenuity